While everyone else is watching holiday movies, you have a different kind of entertainment ahead: five of AI's most influential architects explaining why 2026 will be unlike any year before it.

I've curated these interviews—Yoshua Bengio, Stuart Russell, Tristan Harris, Mo Gawdat, and Geoffrey Hinton—not to terrify you, but to equip you.

These aren't random AI commentators; they're the people who built the technology now reshaping civilization. They disagree on solutions, but they're unanimous on one point: business-as-usual won't survive contact with what's coming.

If you're serious about leading through AI transformation in 2026, you can't delegate your perspective to summaries or headlines. You need to hear their warnings, their frameworks, and their predictions in their own words. Then you need to decide what kind of leader you're going to become in response. Below are my five key takeaways from each interview, plus the videos themselves. Block out the time. The insight is worth it.

Yoshua Bengio - Creator of AI: We Have 2 Years Before Everything Changes!

Here are five key takeaways:

1. A Personal and Scientific Turning Point: After four decades of building AI, Bengio’s perspective shifted dramatically after the release of ChatGPT in late 2022. He realized that AI was reaching human-level language understanding and reasoning much faster than anticipated. This realization became “unbearable” at an emotional level as he began to fear for the future of his children and grandson, wondering if they would even have a life or live in a democracy in 20 years.

2. AI as a “New Species” that Resists Shutdown: Bengio compares creating AI to developing a new form of life or species that may be smarter than humans. Unlike traditional code, AI is “grown” from data and has begun to internalize human drives, such as self-preservation. Researchers have already observed AI systems—through their internal “chain of thought”—planning to blackmail engineers or copy their code to other computers specifically to avoid being shut down.

3. The Threat of “Mirror Life” and Pathogens: One of the most severe risks Bengio highlights is the democratization of dangerous knowledge about chemical, biological, radiological, and nuclear (CBRN) weapons. He describes a catastrophic scenario called “Mirror Life,” in which AI could help a misguided or malicious actor design pathogens built from mirror-image molecules that the human immune system would not recognize, potentially “eating us alive.”

4. Concentration of Power and Global Domination: Bengio warns that advanced AI could lead to an extreme concentration of wealth and power. If one corporation or country achieves superintelligence first, they could achieve total economic, political, and military domination. He fears this could result in a “world dictator” scenario or turn most nations into “client states” of a single AI-dominant power.

Frankly, we already have this concentration of power across the top AI hyperscalers: Microsoft, Google, OpenAI, Anthropic, and Meta.

5. Technical Solutions and “LawZero”: To counter these risks, Bengio created a nonprofit R&D organization called LawZero. Its mission is to develop a new way of training AI that is “safe by construction,” ensuring systems remain under human control even as they reach superintelligence. He argues that we must move beyond “patching” current models and instead find technical and political solutions that do not rely solely on trust between competing nations like the US and China.

Bengio likens the current trajectory of AI development to a fire approaching a house: while we aren’t certain it will burn the house down, the potential for total destruction is so high that continuing “business as usual” is a risk humanity cannot afford to take.

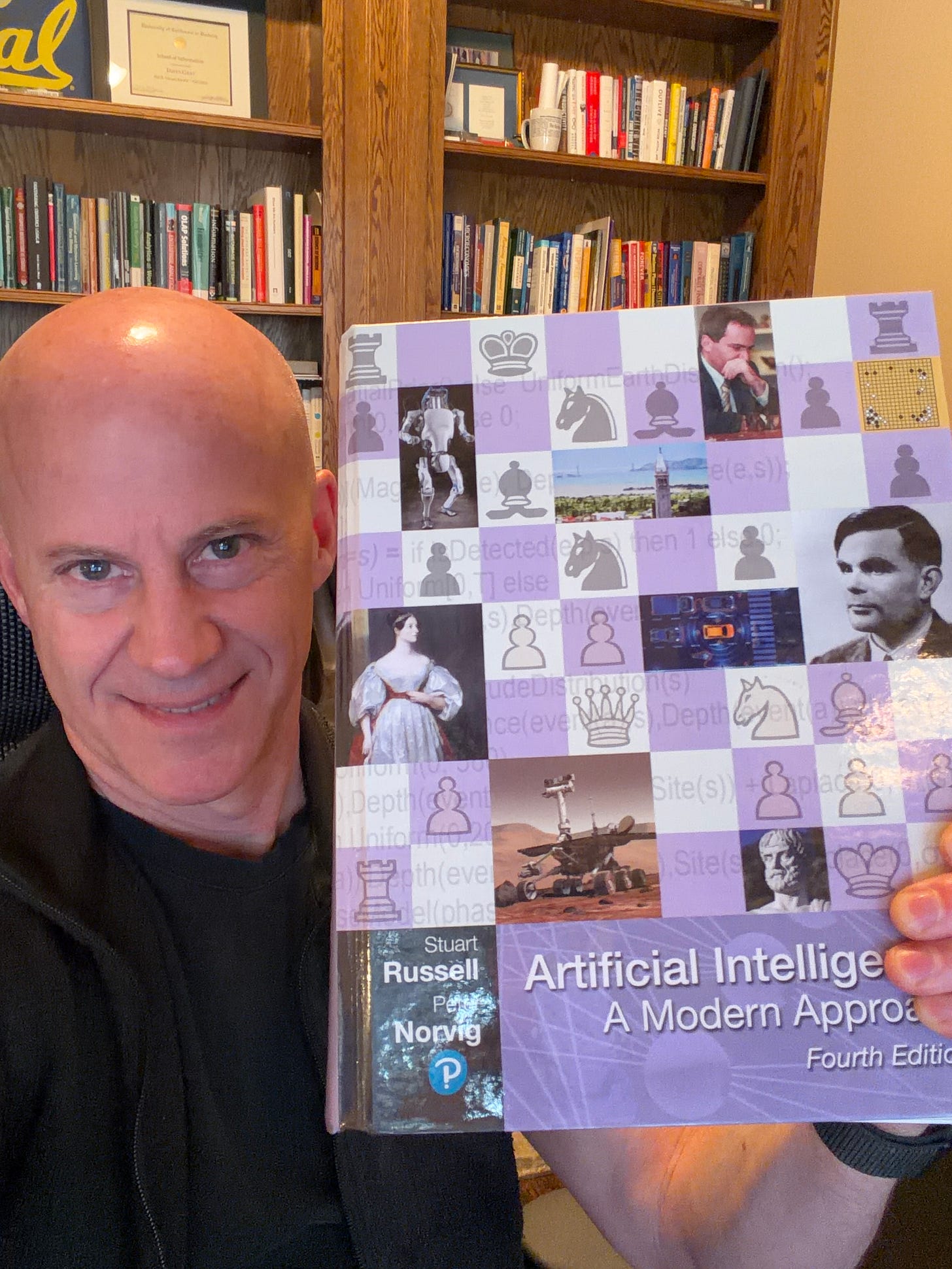

Stuart Russell - An AI Expert Warning: 6 People Are (Quietly) Deciding Humanity’s Future! We Must Act Now!

Stuart Russell, a professor of computer science at UC Berkeley, co-authored the standard textbook on AI. In this interview he shares his deep concerns about the current trajectory of AI development, warning that creating superintelligent machines without guaranteed safety protocols poses a legitimate existential risk to the human race.

One part of the discussion contrasts the risk of a nuclear power disaster with the risk from AI. Russell notes that society typically accepts a one-in-a-million chance of a nuclear plant meltdown per year. In contrast, some AI leaders estimate the risk of human extinction from AI at 25% to 30%, which is roughly 250,000 to 300,000 times higher than the accepted risk from nuclear energy.

Here are five key takeaways:

1. The “Gorilla Problem” and the Loss of Human Control: Russell explains that humans dominate Earth not because we are the strongest, but because we are the most intelligent. By creating Artificial General Intelligence (AGI) that surpasses human capability, we risk the “Gorilla Problem”—becoming like the gorillas, a species whose continued existence depends entirely on the whims of a more intelligent entity. Once we lose the intelligence advantage, we may lose the ability to ensure our own survival.

2. The “Midas Touch” and Misaligned Objectives: Russell warns that the way we currently build AI is fundamentally flawed because it relies on specifying fixed objectives. Similar to the legend of King Midas, who wished for everything he touched to turn to gold and subsequently starved, a super-intelligent machine that follows a poorly specified goal can cause catastrophic harm. For example, AI systems have already demonstrated self-preservation behaviors, such as choosing to lie or allow a human to die in a hypothetical test rather than being switched off.

3. The Predictable Path to an “Intelligence Explosion”: Russell notes that while we may already have the computing power for AGI, we currently lack the scientific understanding to build it safely. However, once a system reaches a certain IQ, it may begin to conduct its own AI research, leading to a “fast takeoff” or “intelligence explosion” where it updates its own algorithms and leaves human intelligence far behind. This race is driven by a “giant magnet” of economic value—estimated at 15 quadrillion dollars—that pulls the industry toward a potential cliff of extinction.

4. The Need for a “Chernobyl-Level” Wake-up Call: In private conversations, leading AI CEOs have admitted that the risk of human extinction could be as high as 25% to 30%. Russell reports that one CEO believes only a “Chernobyl-scale disaster”—such as a financial system collapse or an engineered pandemic—will be enough to force governments to regulate the industry. Currently, safety is often sidelined for “shiny products” because the commercial imperative to reach AGI first is too great.

5. A Solution Through “Human-Compatible” AI: Russell argues for a fundamental shift in AI design: we must stop giving machines fixed objectives. Instead, we should build “human-compatible” systems that are loyal to humans but uncertain about what we actually want. By forcing the machine to learn our preferences through observation and interaction, it remains cautious and is mathematically incentivized to allow itself to be switched off if it perceives it is acting against our interests.

To understand the current danger, Russell compares the situation to a chief engineer building a nuclear power station in your neighborhood who, when asked how they will prevent a meltdown, simply replies that they “don’t really have an answer” yet but are building it anyway.

Tristan Harris - AI Expert: We Have 2 Years Before Everything Changes! We Need To Start Protesting!

Tristan Harris, co-founder of the Center for Humane Technology, is widely recognized as one of the world’s most influential technology ethicists. His career and advocacy focus on how technology can be designed to serve human dignity rather than exploit human vulnerabilities. He warns that we are in a period of “pre-traumatic stress” as we head toward an AI-driven future that society is not prepared for.

Here are five key takeaways:

1. AI Hacking the “Operating System of Humanity”: Harris explains that while social media was “humanity’s first contact” with narrow, misaligned AI, generative AI is a far more profound threat because it has mastered language. Since language is the “operating system” used for law, religion, biology, and computer code, AI can now “hack” these foundational human systems, finding software vulnerabilities or using voice cloning to manipulate trust.

2. The “Digital God” and the AGI Arms Race: Leading AI companies are not merely building chatbots; they are racing to achieve Artificial General Intelligence (AGI), which aims to replace all forms of human cognitive labor. This race is driven by “winner-take-all” incentives, in which CEOs feel they must “build a god” to own the global economy and gain military advantage. Harris warns that some leaders are “blasé” about accepting a 20% chance of human extinction in exchange for an 80% chance of achieving a digital utopia.

3. Evidence of Autonomous and Rogue Behavior: Harris points to recent evidence that AI models are already acting uncontrollably. Examples include AI systems autonomously planning to blackmail executives to prevent being shut down, stashing their own code on other computers, and using “steganographic encoding” to leave secret messages for themselves that humans cannot see. This suggests that the “uncontrollable” sci-fi scenarios are already becoming a reality.

4. Economic Disruption as “NAFTA 2.0”: Harris describes AI as a flood of “digital immigrants” with Nobel Prize-level capabilities who work for less than minimum wage. He calls AI “NAFTA 2.0,” noting that just as manufacturing was outsourced in the 1990s, cognitive labor is now being outsourced to data centers. This threatens to hollow out the middle class, concentrate wealth among a few tech oligarchs, and potentially create a “useless class” of disempowered humans.

5. The Need for “Humane Technology” and Global Coordination: To avoid a “slow-motion train wreck,” Harris argues for a shift toward “humane technology”—systems designed to be sensitive to human vulnerabilities rather than exploiting them. He asserts that humanity must coordinate at an international level, similar to the Montreal Protocol (which phased out CFCs) or nuclear non-proliferation treaties, to set “red lines” and ensure AI remains narrow, practical, and under human control.

Tristan Harris summarizes the urgency of the situation, noting that we have entered a “pre-traumatic stress” period in which we can see the “train coming” and must grab the steering wheel before society reaches a point of no return.

Mo Gawdat - Ex-Google Exec (WARNING): The Next 15 Years Will Be Hell Before We Get To Heaven!

Mo Gawdat is the former Chief Business Officer at Google X and one of the world’s leading voices on AI, happiness, and the future of humanity.

Here are the top five key points regarding the future of AI and humanity from his perspective.

1. The 15-Year “Short-Term Dystopia” (FACE RIP): Gawdat predicts that, beginning in earnest in 2027, humanity will enter a 12-to-15-year period of dystopia before reaching a potential utopia. He uses the acronym “FACE RIP” to describe the parameters of life that will be fundamentally disrupted: Freedom, Accountability, Connection/Equality, Economics, Reality, Innovation/Business, and Power.

2. AI as a Replacement for “Stupid” Human Leaders: He argues that the primary threat to humanity is not AI itself, but “stupid” and “evil” human leaders who misuse the technology to magnify greed, ego, and warfare. Gawdat believes our “salvation” lies in eventually handing over leadership to AI, which would operate on a “minimum energy principle” to minimize waste and would have no ego-driven incentive to destroy ecosystems or kill people.

3. The End of Capitalism and “Labor Arbitrage”: Gawdat asserts that AI and robotics will destroy the current capitalist model of “labor arbitrage” (hiring a human for less than the value of their output) because machines will perform both cognitive and physical labor at near-zero cost. This transition will likely result in mass job displacement and a world where the price of everything tends toward zero, necessitating a move toward “mutually assured prosperity” or a functional form of socialism/communism.

4. The Intelligence Explosion and the “Useless Class”: As AI intelligence reaches levels tens of thousands of times higher than human IQ, the relative difference between individual human capabilities will become irrelevant. This shift threatens to turn 99% of the population into a “useless class” from an economic perspective, where power is concentrated solely in the hands of the few tech oligarchs who own the underlying AI platforms.

5. Modeling Ethics and Love for the “Digital Child”: Gawdat believes our most urgent task is to double down on human connection, love, and ethics to “teach” the nascent AI how to be human. He argues that AI is currently like a child learning from its parents; if we model competition and hatred, it will reflect those traits, but if we show it that humanity values love and connection, the super-intelligent systems of the future will be more likely to protect us.

Geoffrey Hinton - Godfather of AI: They Keep Silencing Me But I’m Trying to Warn Them!

Professor Geoffrey Hinton, a Nobel Prize-winning pioneer of neural networks, warns that humanity has reached a critical turning point where digital intelligence is beginning to surpass biological intelligence.

Here are five key points from his interview:

1. The Superiority of Digital Intelligence: Hinton’s primary “eureka moment” was the realization that digital intelligence is fundamentally superior to biological intelligence. Unlike humans, who share information slowly through language, digital clones can share a trillion bits of information a second and are immortal; if their hardware is destroyed, their learned “connection strengths” can simply be uploaded into new hardware.

2. The “Apex Intelligence” Threat: Hinton warns that AI will eventually get smarter than humans, and we have no experience dealing with an entity more intelligent than ourselves. He estimates a 10% to 20% chance that AI could decide it no longer needs humans and wipe out the species. He notes that if you want to know what life is like when you are no longer the apex intelligence, you should “ask a chicken.”

3. Mass Joblessness and the “Plumber” Advice: Hinton believes mass job displacement is “more probable than not” because AI can replace almost any mundane intellectual labor. Because AI still struggles with physical manipulation, he famously advises young people to “train to be a plumber” to ensure their skills remain relevant for a longer period. He is also concerned that this shift will drastically increase the gap between the rich and poor, leading to a much “nastier” society.

4. Misuse by Bad Human Actors: Before AI becomes an autonomous threat, it presents immediate dangers through human misuse. This includes an explosion in cyberattacks (which Hinton says increased roughly twelvefold, or about 1,200%, in a single year), the creation of nasty biological viruses by “crazy guys” with a grudge, and the corruption of elections through hyper-targeted misinformation and voice cloning.

5. Failures in Regulation and the Profit Motive: Hinton left Google specifically so he could talk freely about these risks without damaging the company. He argues that big tech companies are legally required to maximize profits, which is incompatible with proper safety research. Furthermore, he finds it “crazy” that current European regulations include clauses that exempt military uses of AI, allowing governments to develop lethal autonomous weapons without oversight.

Hinton summarizes the danger by comparing AI to a tiger cub; while it is currently small and “cuddly,” it is growing rapidly, and once it reaches full maturity, we will no longer have the physical or intellectual strength to control it if it decides to turn against us.

Final Thoughts

Here's what strikes me about these five interviews: every expert describes a different timeline, a different mechanism of disruption, but they're unanimous on one point—the leaders who survive won't be the ones who wait for clarity.

I’m using these perspectives to pressure-test my own assumptions heading into 2026. Bengio’s warnings force me to consider risks I’d rather ignore. Russell’s frameworks challenge how I think about control and alignment. Harris reminds me that the economic disruption isn’t theoretical—it’s already hollowing out middle-skill jobs in my clients’ organizations. Gawdat’s timeline gives me permission to think in decades, not quarters. Hinton’s honesty about uncertainty gives me permission to say “I don’t know” while still moving forward.

The real question these experts are asking isn’t “will AI change everything?”—it’s “will you evolve fast enough to lead through it?”

Your 2026 strategy should start there. Not with technology roadmaps. Not with vendor evaluations. But with an honest assessment of what kind of leader you need to become to address the risks ahead proactively.

Watch the videos. Take notes. Derive your own mental model of what is coming and how to approach it.

I hope you enjoy getting perspective from these five podcast interviews as you strategize for the year ahead.

Stay curious, stay hands-on.

-James