Data and AI Strategy Weekly - November 24, 2024

Satya Nadella's AI vision, using AI to build time machines, open vs. closed model innovation, personalizing Google Gemini, Claude and Google integration, AI is shaping expertise economics and strategy

Welcome to the Data and AI Strategy Weekly edition of my newsletter, which focuses on data and AI technology and strategies to accelerate business and career innovation.

This edition includes:

Satya Nadella’s vision for Microsoft AI

Google Drive and Docs now integrated into Claude

Insights on gaps between open and closed models

Personalizing interactions with Google Gemini Advanced

Using AI to build time machines

How AI is shaping expertise economics and strategy

Satya Nadella’s Vision for AI: Key Takeaways from Ignite

Satya Nadella's Ignite keynote emphasized a future where AI transforms businesses, improves efficiency, and enhances operating leverage. The keynote highlighted three main platforms that drive this vision: Copilot, Copilot devices, and the Copilot and AI stack.

Security: A Top Priority

Nadella began by stressing the importance of security in the age of AI. The Secure Future Initiative, with its principles of “secure by design, secure by default, and secure operations,” underscores Microsoft’s commitment to continuous improvement in security. This commitment includes partnering with the security community through initiatives like the Zero Day Quest, a hacking event with substantial rewards for securing cloud and AI systems.

The Power of Copilot: A New UI for AI

Copilot, the UI for AI, was positioned as a transformative force, becoming an organizing layer for how work is done. It aims to empower employees with personalized AI assistance, boosting productivity, creativity, and time savings.

Several key announcements were made regarding Copilot:

Copilot Pages: This "artifact of the AI age" allows users to interact with AI and collaborate with others on a rich, interactive canvas. Users can add interactive charts, code blocks, and diagrams, and control content directly from a chat interface. Nadella uses Copilot Pages to prepare for meetings, pulling information from diverse sources and allowing real-time collaboration with his team.

Deeper Integration with Microsoft 365: Copilot's integration into the Microsoft 365 ecosystem was demonstrated across various applications. In Teams, Copilot provides meeting summaries and can answer questions about shared content. Word drafts can be generated from various sources, and PowerPoint presentations can be built from simple prompts. Outlook's "prioritize my inbox" feature uses AI to help users focus on essential messages.

Copilot in Excel: This transformative feature aims to democratize data analysis, enabling users to get insights from Excel spreadsheets using natural language prompts. Nadella compared its potential impact to that of Excel itself, which revolutionized “number-sense” on a large scale. He sees Copilot in Excel with Python as improving “analysis-sense” across the world.

Extending Copilot: Actions and Agents

The keynote emphasized extending Copilot's capabilities through Actions and Agents:

Copilot Actions: These automate repetitive tasks across the M365 system, much like “Outlook rules for the age of AI.” Users can discover and reuse templates for actions.

New Agents: Agents are envisioned as AI teammates with specific roles and permissions. Examples include facilitator agents for meetings, project manager agents for Planner, self-service agents for HR and IT, and built-in agents for SharePoint sites.

Copilot Studio: This tool allows users to create custom Copilot agents by describing them in natural language and connecting them to data sources. The process was likened to creating familiar documents like Word docs or Excel spreadsheets.

Measuring Copilot's Impact: Copilot Analytics

Recognizing the importance of evaluating ROI, Microsoft introduced Copilot Analytics, which helps businesses correlate Copilot usage with key business metrics. For instance, sales managers can track how Copilot usage impacts win rates.
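As a rough illustration of what correlating usage with a business metric can look like, here is a minimal Python sketch. It is not how Copilot Analytics works internally; the per-team numbers and the names `pearson`, `usage_hours`, and `win_rates` are made up for this example.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from the mean (unnormalized covariance)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: weekly AI-assistant usage hours per sales team,
# and each team's win rate over the same period.
usage_hours = [2, 5, 1, 8, 4, 7]
win_rates = [0.20, 0.31, 0.18, 0.42, 0.27, 0.38]

r = pearson(usage_hours, win_rates)
print(f"correlation between usage and win rate: {r:.2f}")
```

A value near 1 means the two measures rise together in this sample. It does not show that usage causes higher win rates, which is why a correlation like this is best treated as a starting point for further analysis.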

Copilot Devices: AI at the Edge

The keynote shifted to the evolution of devices in the AI era:

Copilot+ PCs: These PCs leverage the cloud and edge computing to deliver a new level of AI-powered experiences.

Windows 365: The cloud PC category saw significant adoption, driven by features like secure streaming of Windows desktops to any device.

Windows 365 Link: This purpose-built device for Windows 365 is admin-less, password-less, and offers enhanced security by default.

Windows Security and Resilience: The keynote underscored the commitment to Windows security with new features in Windows 11 and the introduction of the Windows Resiliency Initiative.

Copilot and the AI Stack: Building Your Own Copilot

Nadella unveiled the third platform, the Copilot and AI stack. This stack exposes the technology behind Microsoft’s AI applications, enabling businesses to build their own copilots and agents.

Several key features were outlined:

Azure as the World's Computer: Nadella highlighted Azure’s global expansion and innovation in sustainable data center construction.

Network Advancements: Hollow core fiber technology promises speed, bandwidth, and power efficiency breakthroughs.

Azure Local: This service extends Azure to edge locations, enabling businesses to run mission-critical AI workloads across hybrid, multi-cloud, and edge environments.

Silicon Innovation: The keynote discussed advancements in silicon, including the Cobalt 100 VMs, Azure Integrated HSM security chip, and the introduction of Azure Boost with an in-house DPU.

Data for AI: Microsoft Fabric, introduced earlier, is a unified data platform with advancements like the integration of SQL Server. This platform enables businesses to manage operational and analytical data in a single place. The introduction of DiskANN technology to Azure databases also enhanced vector search capabilities.

Azure AI Foundry: This app server for the AI age unifies models, tools, safety, and monitoring solutions to empower AI app development. It is the new name for Azure AI Studio, with expanded capabilities and various AI development tools and services brought together under one platform.

Model Diversity and Tools: Foundry boasts a vast model catalog, including open-source models and specialized industry models. New tools were introduced to facilitate model experimentation, data preparation, training, and evaluation.

Agent Service: This service allows developers to create AI agents that can automate business processes and take actions using Logic Apps connectors.

Management Capabilities: AI Foundry includes new management features such as AI Reports for documentation and evaluation results, as well as enhanced safety measures like Prompt Shields and image content evaluations.

GitHub Copilot: GitHub Copilot was highlighted as the leading AI developer tool. New features like Copilot Edits and Workspaces enhance its capabilities, making it an “agentic AI-native IDE.” The future of Copilot includes agents for tasks beyond code writing, encompassing testing, deployment, and performance engineering.

AI for Science: Transforming Research

Nadella emphasized the transformative power of AI in scientific research:

Dynamic Prediction: AI is moving beyond static predictions to modeling dynamic systems, like protein behavior, opening doors to accelerated drug discovery and material science advancements.

Quantum Computing: The quest for reliable qubits continues with advancements in Azure Quantum, achieving a new record of 24 logical qubits with Atom Computing. This progress paves the way for scientific quantum advantage and tackling complex challenges beyond the capabilities of classical computing.

Empowering People with AI Skills

Nadella closed by reiterating Microsoft's mission to empower people and organizations with AI. Initiatives like training programs in AI and digital skills aim to equip individuals with the necessary knowledge to thrive in the AI-powered future.

Satya Nadella's Ignite keynote vividly depicts an AI-driven future where businesses leverage powerful tools and platforms to enhance efficiency, drive innovation, and transform scientific research. The key announcements underscore Microsoft’s focus on security, accessibility, and empowering individuals and organizations to unlock the full potential of AI.

Credit: This summary was curated from content generated by Google NotebookLM and the YouTube transcript.

Google Drive and Docs Integrated into Claude

Anthropic added a new feature in Claude that lets you quickly add Google Docs to your prompts. I find searching for Google Docs a bit clunky right now, but I assume this will become streamlined in time.

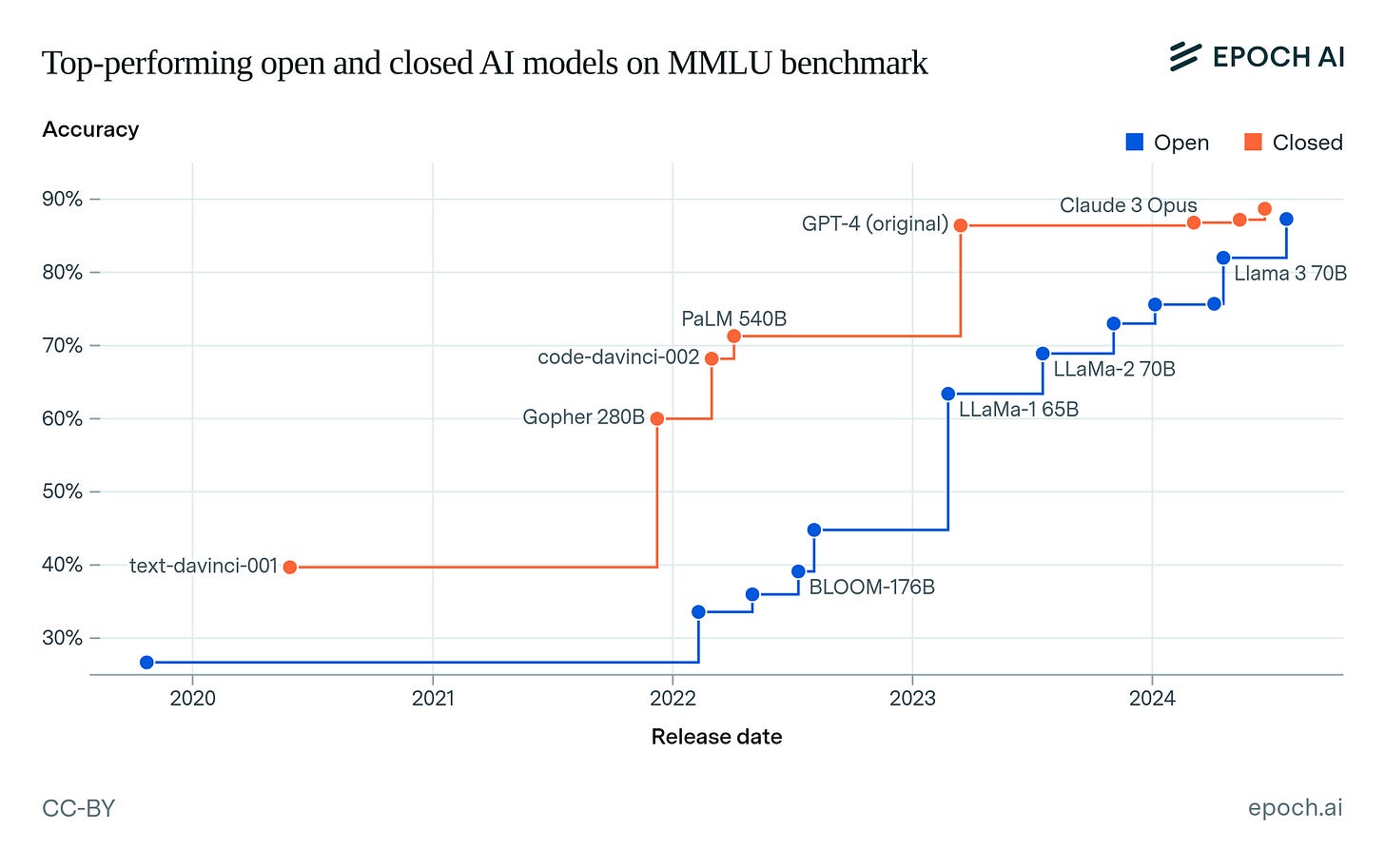

Gap Between The Best Open and Closed Models Expected to Shorten Next Year

Epoch.AI recently published a report, “How Far Behind Are Open Models?”, that compared open and closed AI models and how openness has evolved.

I have summarized a few nuggets from the report that you should find useful.

What is an Open AI Model?

The terms “open” and “proprietary” are widely used for AI models, but it’s helpful to have a more precise definition.

An “open” AI model is one where certain elements of its development are made accessible to the public. This often refers to open-weight models, meaning the trained model’s “weights” (numerical parameters learned during training) are available for anyone to download and use, provided they have the necessary computing resources. These weights are critical because they enable the model to function and can be further fine-tuned for specialized tasks.

To break it down:

Weights: These are like the brain of the AI. If the weights are open, you can take the model and use it or adapt it to your needs.

Code: Training code sets up and runs the training process. Open access to this allows others to recreate the model.

Data: The dataset used to train the model is essential for anyone attempting to reproduce it.

While having open weights makes a model “open-weight,” some argue this isn’t the same as being fully “open-source.” For software, open-source means the code is fully transparent and modifiable. However, AI model weights (essentially large lists of numbers) don’t offer the same level of transparency or ease of modification.

That said, open-weight models can still be adapted or fine-tuned, even if their training code and data aren’t available. This practicality has fueled much of the discussion around AI openness. The Open Source Initiative recently defined open-source AI as requiring openness across all three elements: weights, code, and a sufficiently detailed description of the training data.

For simplicity, when we refer to “open models,” we mean models with open weights, regardless of whether the training code or data is also accessible.
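The distinctions above can be expressed as a small Python sketch. It is only an illustration of the definitions in this section, not an official classification scheme; the class `ModelRelease`, the function `openness_label`, and the example releases are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRelease:
    """Which elements of a model's development are publicly accessible."""
    name: str
    weights_available: bool
    training_code_available: bool
    training_data_described: bool


def openness_label(release: ModelRelease) -> str:
    """Label a release using the simplified definitions in this section."""
    # Simplification: without downloadable weights, the model is treated as closed.
    if not release.weights_available:
        return "closed"
    # Weights, code, and a sufficiently detailed description of the data.
    if release.training_code_available and release.training_data_described:
        return "open-source (all three elements)"
    # Weights only: usable and fine-tunable, but not fully reproducible.
    return "open-weight"


# Hypothetical releases, for illustration only
examples = [
    ModelRelease("example-api-only", False, False, False),
    ModelRelease("example-weights-only", True, False, False),
    ModelRelease("example-fully-open", True, True, True),
]

for release in examples:
    print(f"{release.name}: {openness_label(release)}")
```

Under the convention used here, both the second and third examples count as “open models,” since each makes its weights available.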

Key Takeaways from the Epoch.AI Study

In terms of benchmark performance, the best open large language models (LLMs) have lagged the best closed LLMs by 5 to 22 months.

In terms of training compute, the largest open models have lagged behind the largest closed models by about 15 months.

It is unclear whether open LLMs use training compute more or less efficiently than closed LLMs.

Based on the trend in Meta’s open Llama models, we expect the lag between the best open and closed models to shorten next year. Whether the gap will shrink or widen afterwards depends on whether open models remain a viable business strategy for leading developers like Meta.

Our overall finding is that in recent years, once-frontier AI capabilities are reached by open models with a lag of about one year.

Source: Ben Cottier et al. (2024), "How Far Behind Are Open Models?". Published online at epoch.ai. Retrieved from: 'https://epoch.ai/blog/open-models-report' [online resource]

Personalizing the Google Gemini AI Assistant with Memory

I have recently started to experiment more with the Google Gemini AI Assistant since I am also a Google Workspace user. Google extended its trial offer from 14 days to 60 days for Workspace subscribers, so I was curious to learn more.

This week, Google expanded memory in Gemini Advanced (subscription required), letting you configure and store preferences that the model uses to personalize interactions. The model will also adapt responses based on your stored context. You have full control to view, edit, or delete any information you've shared.

To personalize Gemini, you simply ask it in a prompt to remember anything that is important to you, such as how you want the output formatted, recent information, the tone of your output, and more. In upcoming posts, I will share more about how I use it and its value.

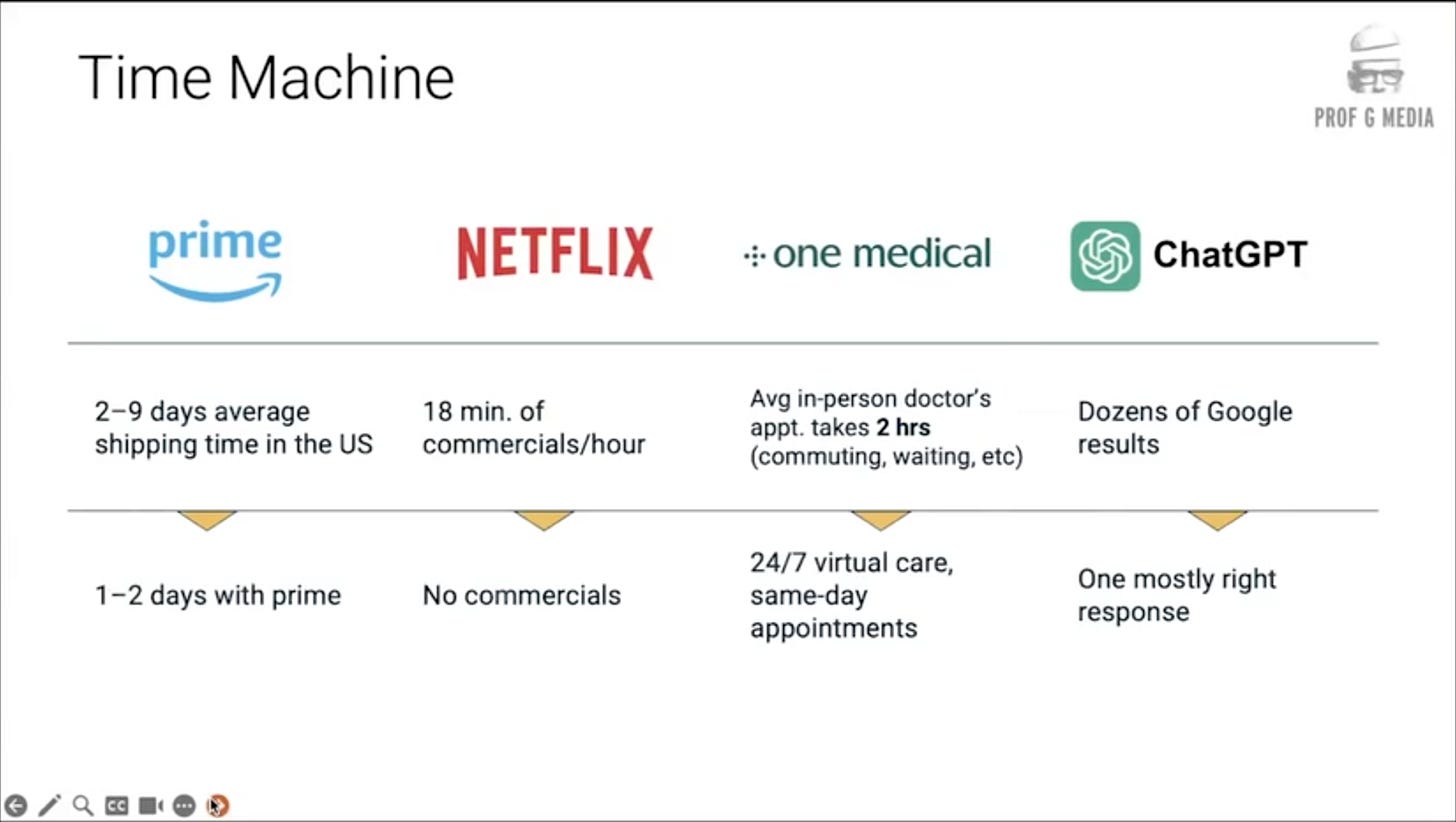

Using AI to Build Time Machines

I recently watched parts of the virtual AI: ROI Conference hosted by Prof G’s Section organization. One idea Prof G spoke about stuck in my head: using AI to create value by building a “time machine.” Tune in at around the 7-minute mark, where he talks about refining data into insights and then transitions into the idea of the time machine. A value proposition built on time saved through an AI-powered product or service is easily and quickly understood by anyone.

“If you want to build a company that’s worth more than $50 billion or you want to add $10 billion in a short amount of time in market value, they essentially, they all do the same thing. And that is, they build a time machine.” - Professor Scott Galloway

The AI Era: How Expertise and Competition Are Transforming Business

My focus has always been a fusion of business and technology. The Harvard Business Review has been a valuable resource for me for over 30 years to explore the business implications of technology strategy. You can subscribe to free articles in various categories including AI, machine learning, and data.

I have summarized the key nuggets from the upcoming HBR article “Strategy in an Era of Abundant Expertise” - How to thrive when AI makes knowledge and know-how cheaper and easier to access.

The article underscores the impacts and strategic implications of AI making expertise cheaper and more widely available than ever.

The authors argue companies that take advantage of AI will benefit from what they call the “triple product”:

more-efficient operations

more-productive workforces, and

sharper, more-focused growth

Critically, they say leaders should be asking themselves these three questions:

Which aspects of the problem we now solve for customers will our customers solve for themselves using AI?

Which types of expertise that we currently possess will need to evolve most if we are to remain ahead of AI’s capabilities?

Which assets can we build or augment to enhance our ability to stay competitive as AI advances?

Key Takeaways

AI is redefining expertise and its cost

Expertise is the combination of deep theoretical knowledge and practical skills. AI tools now provide easy and affordable access to expansive expertise in various domains, enabling even small businesses to compete effectively.

As AI capabilities improve and costs decline, businesses must adapt quickly or risk losing relevance, as seen when Nokia’s hardware expertise became obsolete in the smartphone era dominated by software-driven competitors.

The “Triple Product” of AI

Companies adopting AI effectively gain three critical benefits:

Operational efficiency: AI automates repetitive tasks, enabling processes to run more smoothly and cost-effectively.

Workforce productivity: AI assistants elevate employees’ performance, bringing underperformers to average levels and allowing top performers to focus on creative, high-value tasks.

Sharper growth focus: By offloading non-core activities to AI, businesses can allocate more resources to areas that differentiate them in the market.

The Evolving Role of Expertise

Expertise required to stay competitive is expanding rapidly, while the cost of accessing it is dropping. This dual force shapes how businesses define their scope and manage operations.

Many companies are narrowing their focus to core competencies while outsourcing non-core activities to specialized platforms, using AI to enhance efficiency across the value chain.

AI as a Catalyst for Business Transformation

Early adopters like Moderna and FocusFuel demonstrate how AI enables businesses to streamline operations, reduce time-to-market, and focus on value-creating activities. For instance, AI assistants helped Moderna’s employees optimize processes, saving weeks of effort and enabling innovation.

AI adoption can also reduce onboarding time, broaden talent pools, and restructure organizational hierarchies as employees leverage AI agents for task execution.

Strategic Imperatives for the AI Era

To remain competitive, businesses must reimagine their strategies:

Identify what customers will solve with AI: For example, travel agencies need to pivot to offering unique experiences as customers increasingly rely on AI for itinerary planning.

Evolve core expertise: Focus on uniquely human capabilities like empathy, collaboration, and creativity that AI cannot replicate.

Build sustainable advantages: Invest in durable assets like customer relationships, brand equity, and proprietary networks that AI is unlikely to replace.

Getting Started with AI

Begin with targeted AI deployments in processes where benefits are well-documented (e.g., coding or customer service).

Establish AI safety and governance protocols to mitigate risks such as bias or misinformation.

Train employees to use AI effectively, creating a culture of continuous learning and innovation.

What This Means for Businesses

The AI era is not just about automating tasks but fundamentally transforming how expertise is accessed and deployed.

Companies that harness AI to streamline operations, elevate their workforce, and focus on unique value creation will thrive.

However, success demands strategic clarity, investment in durable advantages, and a commitment to reshaping organizational processes for an AI-enabled future.

In this era, the ability to adapt will define the winners.

Hands-On AI for High-Performance Leaders Cohort Course

My principle is that to learn AI, you must use AI. I also believe that leaders and non-technical professionals who establish a base of technical acumen will deliver more impact as they draw connections between what AI technology is capable of and how it can be woven into AI-enabled products and processes.

This is the idea behind a new Maven cohort course I have launched, “Hands-On AI for High-Performance Leaders.” Learning popular AI frameworks, tools, and platforms hands-on will accelerate your technical understanding and demystify concepts you can put into practice.

Check out the course syllabus to enroll or subscribe to the waitlist to get announcements on future cohorts.